Our experience from numerous R&D efforts suggests that, because of the ever-increasing value of data, data integration is most often viewed as a substitute for system or application integration. We believe this is not always the case.

During the 1970s, organizations began using multiple databases to exploit aggregated business information. The term ETL was coined to describe an extended family of technologies that support processes strung together to 'Extract' data from information sources; 'Transform', i.e. convert, data from one form to another; and 'Load', i.e. write, data to a target data store or data warehouse.

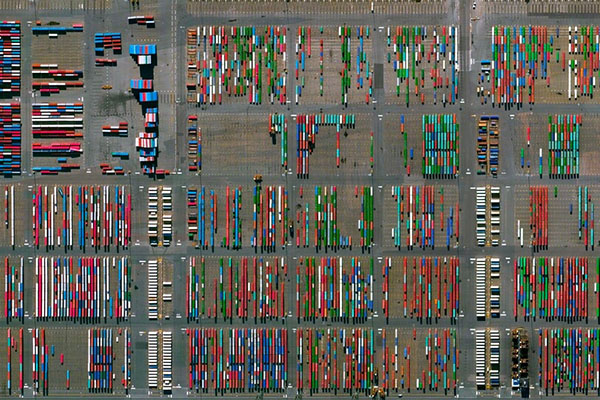
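The three ETL stages described above can be sketched in a few lines of Python. This is a minimal illustration using only the standard library, not a production pipeline: the CSV content, the `orders` table, the function names and the conversion rates are all hypothetical values chosen for the example.

```python
import csv
import io
import sqlite3

# Hypothetical export from an operational system (illustrative data only).
SOURCE_CSV = """order_id,customer,amount,currency
1,alice,10.50,EUR
2,bob,20.00,USD
3,alice,5.25,EUR
"""

# Assumed fixed conversion rates, invented for this example.
RATES_TO_EUR = {"EUR": 1.0, "USD": 0.5}


def extract(text):
    """Extract: read raw rows from the information source."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: convert types and normalize every amount to EUR."""
    return [
        (
            int(row["order_id"]),
            row["customer"].title(),
            round(float(row["amount"]) * RATES_TO_EUR[row["currency"]], 2),
        )
        for row in rows
    ]


def load(records, conn):
    """Load: write the transformed records to the target data store."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount_eur REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", records)
    conn.commit()


def run_pipeline(conn):
    """String the three stages together into one process."""
    load(transform(extract(SOURCE_CSV)), conn)
```

Calling `run_pipeline(sqlite3.connect(":memory:"))` fills an in-memory database that can then be queried for aggregated information, for example total spend per customer. Note that the source system and the target store never interact directly; only data moves between them.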

In the era of Big Data, ETL and its descendant Data Warehousing technologies have been reinvented, driven by the omnipresence of data feeds made available by various public and enterprise applications and by the overwhelming network and application connectivity capacity that Web 3.0 is beginning to capitalize on. To make things more complex, the rapid growth of the Internet of Things (IoT) is acting as a multiplier of the data streaming, processing and control features of modern software solutions.

This eruption of data aggregation capabilities has led many decision makers to consider data integration a silver-bullet solution that satisfies both the expectations of end users and the business requirements of product roadmapping and business innovation.

This view is understandable, given that data pipelining is a very common practice in Big Data and that more and more modern applications (including many of the social media applications we all use ceaselessly) rely heavily on manipulating real-time data streams to deliver unprecedented levels of value creation.

Still, system and application integration require more than that. They demand a direct link, at a functional level, between all the heterogeneous IT systems involved. Application integration is therefore very much a real-time sharing of transaction information, and it requires some form of controlled architecture development strategy.
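To make the contrast with data integration concrete, the following sketch shows one possible shape of functional-level integration: a transaction raised in one system is shared with the others the moment it happens, rather than copied into a store afterwards. It is an illustrative assumption, not a prescribed pattern; the `EventBus`, `Inventory` and `Billing` classes and the `order_placed` event name are hypothetical, and a real deployment would typically use a message broker or service bus instead of in-process calls.

```python
from collections import defaultdict


class EventBus:
    """Hypothetical in-process stand-in for an integration layer."""

    def __init__(self):
        self._handlers = defaultdict(list)

    def subscribe(self, event_type, handler):
        """Register a system's handler for one type of transaction."""
        self._handlers[event_type].append(handler)

    def publish(self, event_type, payload):
        """Deliver the transaction to every subscribed system immediately."""
        for handler in self._handlers[event_type]:
            handler(payload)


class Inventory:
    """Hypothetical stock-keeping system."""

    def __init__(self, stock):
        self.stock = dict(stock)

    def on_order_placed(self, order):
        self.stock[order["sku"]] -= order["quantity"]


class Billing:
    """Hypothetical invoicing system."""

    def __init__(self):
        self.invoices = []

    def on_order_placed(self, order):
        total = order["quantity"] * order["unit_price"]
        self.invoices.append((order["order_id"], total))


def place_order(bus, order):
    """The ordering system shares the transaction as it occurs."""
    bus.publish("order_placed", order)
```

Here the inventory and billing systems react to the same order as part of a single transaction flow, which is why this style of integration needs an agreed architecture (shared event names, payload shapes and responsibilities) in a way that a one-directional data pipeline does not.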

Our experience from Research and Innovation Development projects suggests that integrating digital technology with the aim of fundamentally changing value creation, in both public and commercial settings, requires a much broader IT development strategy: one that accounts equally for business, information and delivery requirements. Enterprise Architecture (EA) methodologies and the resulting integration design patterns have proven successful in providing solutions that can incorporate systems that were never meant to work together. This is especially important for innovation that transcends different IT ecosystems and stakeholder interests.

With the correct approach, EA can help new product research and innovation development to utilize Big Data techniques that maximize return on investment. In this setting, architects need to scrutinize the business capabilities they need to deliver and develop a plan for system and application integration in which small proof-of-concept implementations validate whether Big Data is of actual value. Once this impact is validated, the process can be scaled up.

Therefore, by following a more agile approach when specifying the software architecture, new business and innovation-led developments can harness the advanced capabilities of Big Data technologies and deliver engaging, effective software applications that respond better to, and capitalize on, the ever-evolving opportunities of modern markets.

Author: George Koutalieris.